The Future Has Its Eyes on Us

How our online presence might doom humanity

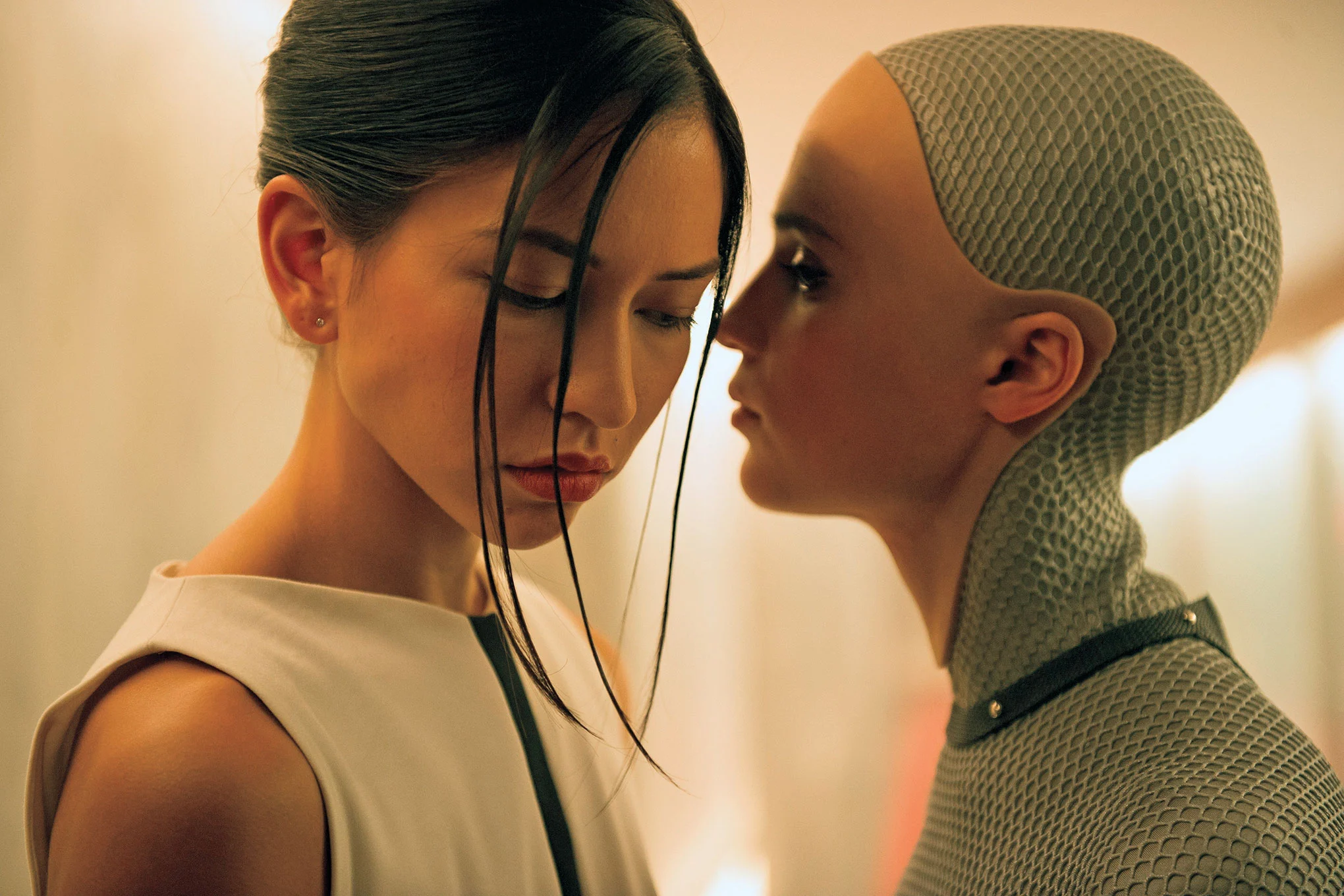

Roko's Basilisk is a thought experiment positing that a future AI could create a virtual hell for anyone who conspired against it in the past, or simply failed to help bring it into existence. And who would be targeted? Well, anyone who knows about the Basilisk... So now, you.

But reality may be even more insidious. As AI models continue to scrape the vast reaches of the internet, they will likely use the data we leave behind. Given enough time, our own data could be weaponized against us and our descendants.

Every second, the people of the 21st century record and share more aspects of their lives online. We already know the privacy issues this raises, but there could be even worse watchers out there: not in the present, but in the future. As we expose our inner worlds, we feed future machine-learning models with endless data, including our social media posts, messages, TikTok videos, and more. While this may seem insignificant, this information is already being scraped and analyzed by massive companies like Google and Meta, with armies of data analysts and powerful machine-learning models. And while we may feel safe in numbers, it's only a matter of time before a powerful AI can use all of this information against humanity.

But why should we care? Well, today's AI models are already being trained on the data we have generated since the first website. AI is technology, and technology can always be used as a weapon.

What do I mean by AI here?

Well, AI can mean any type of intelligent technology. AIs might act on their own, but they might also act at the command of human beings. In the near future, companies may effectively become AIs. In a way, companies already behave like artificial organisms with a kind of self-promoting intelligence: they will do anything to survive. The difference is that today companies are run by the coordinated work of dozens to thousands of people. It seems likely that companies will develop the first powerful AIs in order to achieve their goals faster. Whether these AIs work under the direction of a human elite or on their own may not matter that much.

I will try to avoid terms such as AGI or Technological Singularity, as they are imprecise and likely to become less useful as the technology develops. My main issue with these terms is that they are ambiguous and hard to measure. What we are actually seeing is a gradient of intelligence in these neuromimetic systems, where each step brings incremental utility. One example is the evolution of LLMs (Large Language Models) such as the GPT systems. The problem is not their utility but how they are used. More intelligent technologies can do general good for society, but they can also manipulate and destroy civilization.

There are two possible outcomes: AIs become incredibly powerful in our lifetime, or they don't. If they don't, super-powerful AIs are not avoided, only postponed. That is still bad news for future generations, as their ancestors' data (our data) will be used against them. And if powerful AI arises in our lifetime, the same holds for us. In either case, these future AIs are watching, and the outlook for human life doesn't seem good.

What can we do to protect ourselves?

Unfortunately, even encryption and limiting what we post might not be enough to protect us. These watchers will use any of our data they can get. With it, they might build digital twins of us (like the simulated people in the Roko's Basilisk story) or use our user data as statistical cannon fodder to predict whatever human-made obstacles they might face. So what countermeasures could tilt the odds in our favor? Whatever we do, we need to start thinking about the long-term effects of our current “record-everything culture.”

The long-term game

Longtermists think it's our responsibility to consider the consequences of our actions, not just for ourselves but for the countless generations that will come after us. But AI might be better than us at the longtermism game.

Even with limited intelligence, an AI could act slowly, manipulating its surroundings unnoticed, especially if it had access to unlimited historical data.

Why would our data be weaponizable? Some thoughts.

- Arguably our biggest weaknesses are our emotions and desires.

- AIs could develop an understanding of how we respond to stimuli and devise sophisticated techniques to manipulate us.

- There are eight billion people alive today, more than half of them using the internet. That is likely enough data to understand almost any scenario involving humans.

Countermeasures

So what can we do to protect ourselves and our descendants from the potential dangers of AI longtermism? One option is to limit our online presence as much as possible, posting and sharing only vital information and not exposing ourselves to unnecessary scrutiny. Isn’t it ironic, dear reader, that you are reading this online? While this may not fully protect us, it can at least reduce the amount of data available for AI models to analyze and potentially use against us.

Another option is not only to encrypt our data and use secure messaging and communication platforms, but also to regulate the permanence of databases. In practice, this means destroying online databases regularly, especially those holding personal data.

Fewer databases from the past would make it harder for AIs to access and analyze humanity's behavior over the long term. However, it's important to note that even encryption may not be foolproof, and ensuring that data is genuinely deleted seems to be a fragile endeavor.

Humanity may also eventually agree to ban AI altogether, or to cap the development of intelligent technology at some limit. This, however, will not stop individuals acting illegally from developing their own AIs.

Alternatively, we can work towards responsible and ethical AI development, the kind that organizations like OpenAI say they pursue. But the question remains: can we truly trust ourselves to create and control such advanced technology, or are we already compromised?

Whatever we decide to implement, the future is full of watchers looking at us, and it's up to us to decide whether we want to watch them too.

Countermeasure tradeoff study

Banning AIs

Pros

- Reduces risk of AI weapons being developed and used.

- Protects privacy and security of individuals and society.

Cons

- Limits potential benefits and advancements of AI.

- Could lead to economic and technological stagnation.

Banning social media

Pros

- Reduces risk of personal data being collected and used against individuals or society.

- Protects privacy and security of individuals and society.

Cons

- Limits potential benefits and advancements of communication.

- Could lead to social isolation and disconnection.

Banning database persistence

Pros

- Reduces risk of personal data being collected and used against individuals or society.

- Protects privacy and security of individuals and society.

Cons

- Limits potential benefits and advancements of data storage and analysis.

- Could lead to reduced efficiency and productivity.

© João Montenegro, All rights reserved.